How to Get Google to Crawl My Website Again

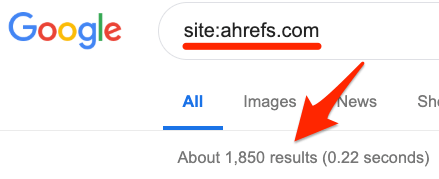

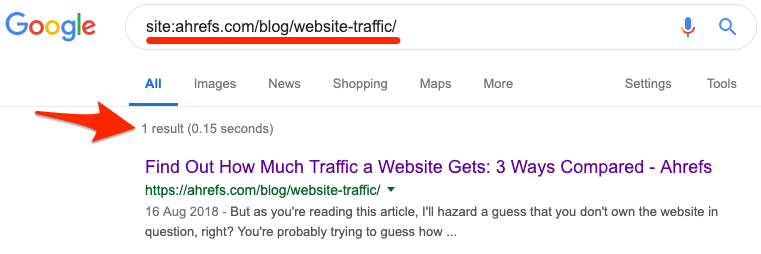

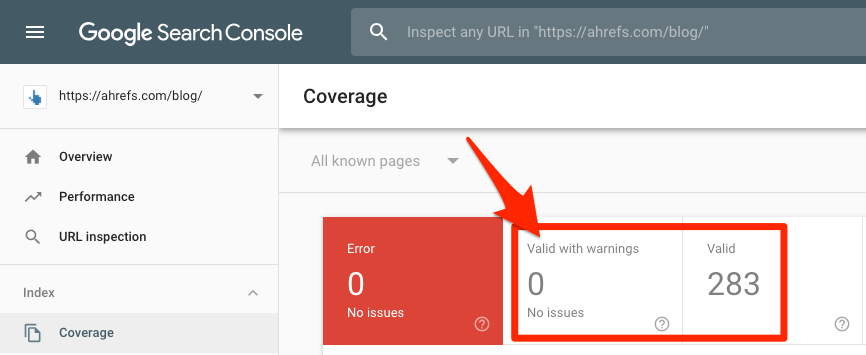

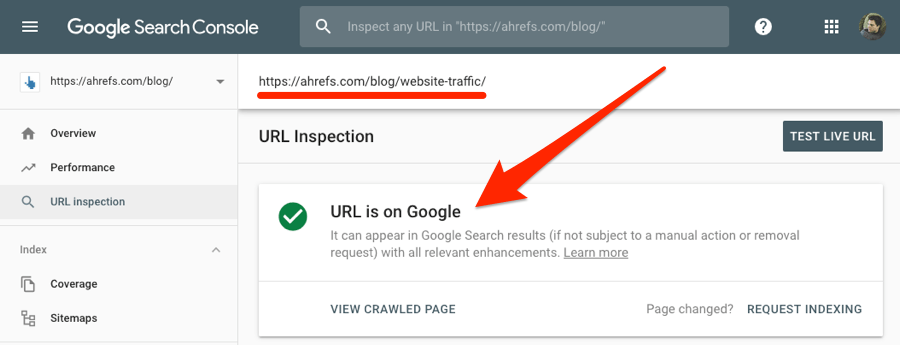

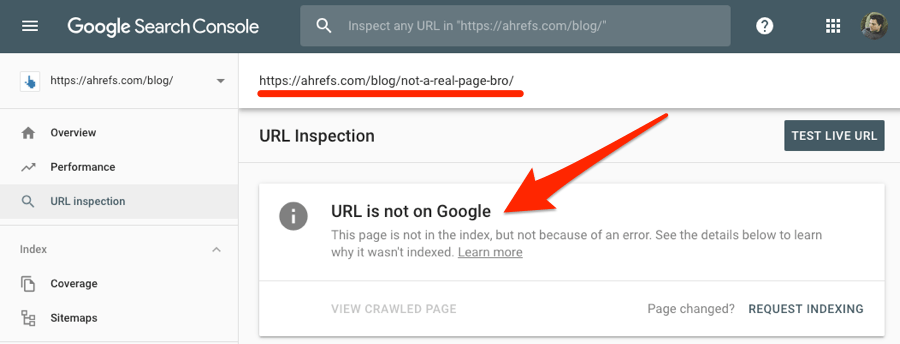

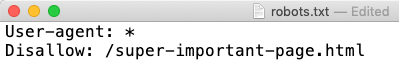

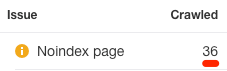

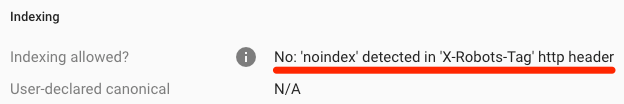

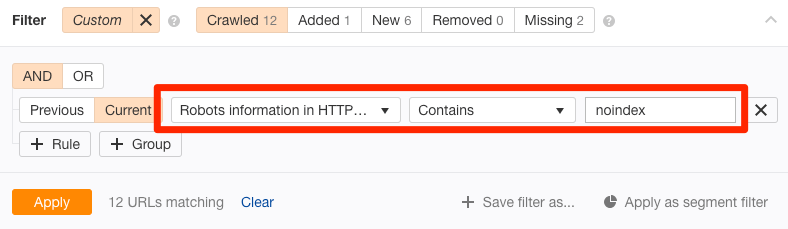

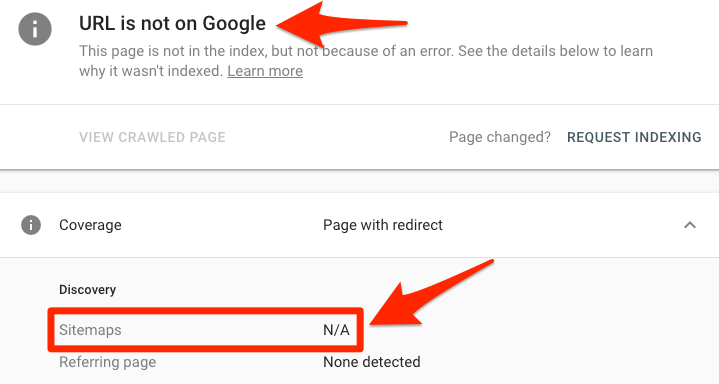

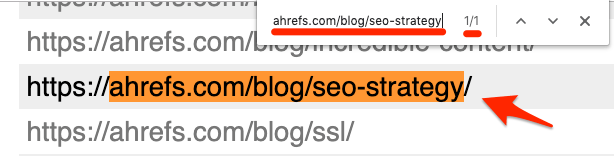

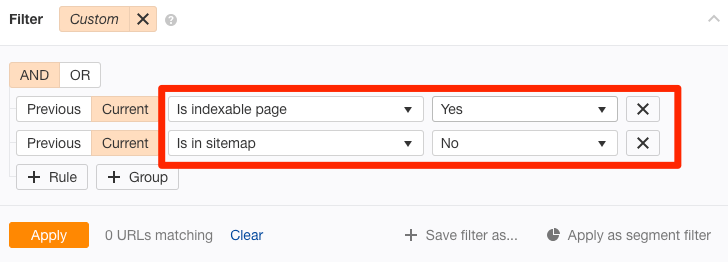

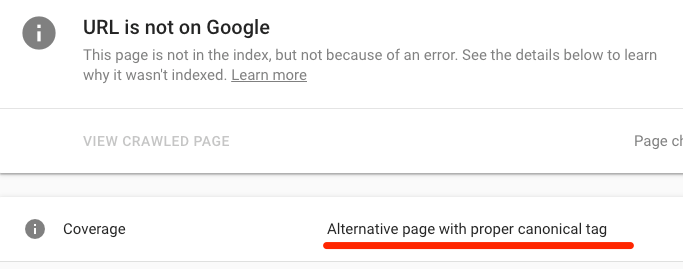

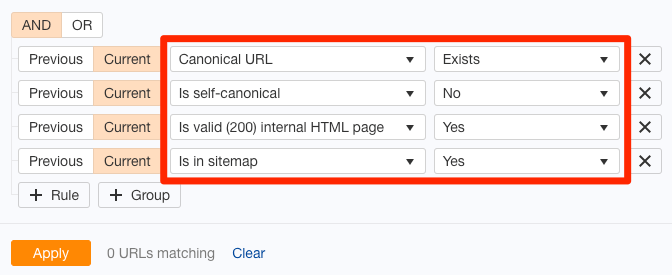

If Google doesn't index your website, then you're pretty much invisible. You won't show up for any search queries, and you won't get any organic traffic whatsoever. Zero. Nada. Zilch.

Given that you're here, I'm guessing this isn't news to you. So let's get straight down to business. This article teaches you how to fix three common indexing problems. But first, let's make sure we're on the same page and fully understand this indexing malarkey.

Google discovers new web pages by crawling the web, and then they add those pages to their index. They do this using a web spider called Googlebot. Confused? Here's a video from Google that explains the process in more detail: https://www.youtube.com/watch?v=BNHR6IQJGZs

When you Google something, you're asking Google to return all relevant pages from their index. Because there are often millions of pages that fit the bill, Google's ranking algorithm does its best to sort the pages so that you see the best and most relevant results first.

The critical point I'm making here is that indexing and ranking are two different things. Indexing is showing up for the race; ranking is winning. You can't win without showing up for the race in the first place.

To check whether you're indexed, go to Google, then search for site:yourwebsite.com. The number of results shows roughly how many of your pages Google has indexed. If you want to check the index status of a specific URL, use the same operator with the page's path: site:yourwebsite.com/web-page-slug. No results will show up if the page isn't indexed.

Now, it's worth noting that if you're a Google Search Console user, you can use the Coverage report to get a more accurate insight into the index status of your website. Just go to: Google Search Console > Index > Coverage

Look at the number of valid pages (with and without warnings). If these two numbers total anything but zero, then Google has at least some of the pages on your website indexed.
If not, then you have a severe problem because none of your web pages are indexed.

Sidenote. Not a Google Search Console user? Sign up. It's free. Everyone who runs a website and cares about getting traffic from Google should use Google Search Console. It's that important.

You can also use Search Console to check whether a specific page is indexed. To do that, paste the URL into the URL Inspection tool. If that page is indexed, it'll say "URL is on Google." If the page isn't indexed, you'll see the words "URL is not on Google."

Found that your website or web page isn't indexed in Google? Try this: paste the URL into the URL Inspection tool, then click the "Request indexing" button.

This is good practice whenever you publish a new post or page. You're effectively telling Google that you've added something new to your site and that they should take a look at it. However, requesting indexing is unlikely to solve underlying problems preventing Google from indexing old pages. If that's the case, follow the checklist below to diagnose and fix the problem.

Is Google not indexing your entire website? It could be due to a crawl block in something called a robots.txt file. To check for this issue, go to yourdomain.com/robots.txt. Look for either of these two snippets of code: a "User-agent: Googlebot" line or a "User-agent: *" line, followed by "Disallow: /". Both of these tell Googlebot that it's not allowed to crawl any pages on your site. To fix the issue, remove them. It's that simple.

A crawl block in robots.txt could also be the culprit if Google isn't indexing a single web page. To check if this is the case, paste the URL into the URL Inspection tool in Google Search Console. Click on the Coverage block to reveal more details, then look for the "Crawl allowed? No: blocked by robots.txt" error. This indicates that the page is blocked in robots.txt.
If that's the case, recheck your robots.txt file for any "disallow" rules relating to the page or related subsection. Remove where necessary.

Google won't index pages if you tell them not to. This is useful for keeping some web pages private. There are two ways to do it.

The first is a meta tag. Pages with a robots meta tag (name="robots") or a Googlebot-specific one (name="googlebot") whose content is "noindex" in the head section of their HTML won't be indexed by Google. This is a meta robots tag, and it tells search engines whether they can or can't index the page.

Sidenote. The key part is the "noindex" value. If you see that, then the page is set to noindex.

To find all pages with a noindex meta tag on your site, run a crawl with Ahrefs' Site Audit. Go to the Indexability report. Look for "Noindex page" warnings. Click through to see all affected pages. Remove the noindex meta tag from any pages where it doesn't belong.

The second is the X-Robots-Tag HTTP response header, which crawlers also respect. You can implement this using a server-side scripting language like PHP, in your .htaccess file, or by changing your server configuration.

The URL Inspection tool in Search Console tells you whether Google is blocked from indexing a page because of this header. Just enter your URL, then look for "Indexing allowed? No: 'noindex' detected in 'X-Robots-Tag' http header". If you want to check for this issue across your site, run a crawl in Ahrefs' Site Audit tool, then use the "Robots information in HTTP header" filter in the Page Explorer. Tell your developer to exclude pages you want indexed from returning this header.

Recommended reading: Robots meta tag and X-Robots-Tag HTTP header specifications

A sitemap tells Google which pages on your site are important, and which aren't. It may also give some guidance on how often they should be re-crawled. Google should be able to find pages on your website regardless of whether they're in your sitemap, but it's still good practice to include them. After all, there's no point making Google's life difficult.
To check if a page is in your sitemap, use the URL Inspection tool in Search Console. If you see the "URL is not on Google" error and "Sitemap: N/A," then it isn't in your sitemap or indexed.

Not using Search Console? Head to your sitemap URL (usually yourdomain.com/sitemap.xml) and search for the page.

Or, if you want to find all the crawlable and indexable pages that aren't in your sitemap, run a crawl in Ahrefs' Site Audit. Go to Page Explorer and filter for pages that are crawlable and indexable but missing from your sitemap. These pages should be in your sitemap, so add them.

Once done, let Google know that you've updated your sitemap by pinging Google's sitemap ping URL (http://www.google.com/ping?sitemap= followed by your sitemap URL). That should speed up Google's indexing of the page. Note that Google has since deprecated this ping endpoint; submitting the sitemap in Search Console or listing it in your robots.txt file is now the supported route.

A canonical tag tells Google which is the preferred version of a page. It's a link element with rel="canonical" whose href points to the preferred URL.

Most pages either have no canonical tag, or what's called a self-referencing canonical tag. That tells Google the page itself is the preferred and probably the only version. In other words, you want this page to be indexed.

But if your page has a rogue canonical tag, then it could be telling Google about a preferred version of this page that doesn't exist. In which case, your page won't get indexed.

To check for a canonical, use Google's URL Inspection tool. You'll see an "Alternate page with canonical tag" warning if the canonical points to another page. If this shouldn't be there, and you want to index the page, remove the canonical tag.

Important. Canonical tags aren't always bad. Most pages with these tags will have them for a reason. If you see that your page has a canonical set, then check the canonical page. If this is indeed the preferred version of the page, and there's no need to index the page in question as well, then the canonical tag should stay.
If you want a quick way to find rogue canonical tags across your entire site, run a crawl in Ahrefs' Site Audit tool and go to the Page Explorer. Filter for pages in your sitemap with non-self-referencing canonical tags. Because you almost certainly want to index the pages in your sitemap, you should investigate further if this filter returns any results. It's highly likely that these pages either have a rogue canonical or shouldn't be in your sitemap in the first place.

Orphan pages are those without internal links pointing to them. Because Google discovers new content by crawling the web, they're unable to discover orphan pages through that process. Website visitors won't be able to find them either.

To check for orphan pages, crawl your site with Ahrefs' Site Audit. Next, check the Links report for "Orphan page (has no incoming internal links)" errors. This shows all pages that are both indexable and present in your sitemap, yet have no internal links pointing to them.

Important. This process only works if all the pages you want indexed are in your sitemap. Not confident that they are? Export a full list of the pages on your site (for example, from your CMS), crawl your website, and cross-reference the two lists of URLs. Any URLs not found during the crawl are orphan pages.

You can fix orphan pages in one of two ways: if a page is unimportant, delete it and remove it from your sitemap; if it's important, add internal links to it from other pages on your site.

Nofollow links are links with a rel="nofollow" attribute. They prevent the transfer of PageRank to the destination URL. Google also doesn't crawl nofollow links. Here's what Google says about the matter:

"Essentially, using nofollow causes us to drop the target links from our overall graph of the web. However, the target pages may still appear in our index if other sites link to them without using nofollow, or if the URLs are submitted to Google in a Sitemap."

In short, you should make sure that all internal links to indexable pages are followed. To do this, use Ahrefs' Site Audit tool to crawl your site.
Check the Links report for indexable pages with "Page has nofollow incoming internal links only" errors. Remove the nofollow attribute from these internal links, assuming that you want Google to index the page. If not, either delete the page or noindex it.

Recommended reading: What Is a Nofollow Link? Everything You Need to Know (No Jargon!)

Google discovers new content by crawling your website. If you fail to internally link to the page in question, then they may not be able to find it. One easy solution to this problem is to add some internal links to the page. You can do that from any other web page that Google can crawl and index.

However, if you want Google to index the page as fast as possible, it makes sense to do so from one of your more "powerful" pages. Why? Because Google is likely to recrawl such pages faster than less important pages.

To do this, head over to Ahrefs' Site Explorer, enter your domain, then visit the Best by links report. This shows all the pages on your website sorted by URL Rating (UR). In other words, it shows the most authoritative pages first. Skim this list and look for relevant pages from which to add internal links to the page in question.

For example, if we were looking to add an internal link to our guest posting guide, our link building guide would probably offer a relevant place from which to do so. And that page just so happens to be the 11th most authoritative page on our blog. Google will then see and follow that link next time they recrawl the page.

Pro tip. Paste the page from which you added the internal link into Google's URL Inspection tool. Hit the "Request indexing" button to let Google know that something on the page has changed and that they should recrawl it as soon as possible. This may speed up the process of them discovering the internal link and, consequently, the page you want indexed.
Google is unlikely to index low-quality pages because they hold no value for its users. Here's what Google's John Mueller said about indexing in 2018:

"We never index all known URLs, that's pretty normal. I'd focus on making the site awesome and inspiring, then things usually work out better."
🍌 John 🍌 (@JohnMu), January 3, 2018

He implies that if you want Google to index your website or web page, it needs to be "awesome and inspiring."

If you've ruled out technical issues for the lack of indexing, then a lack of value could be the culprit. For that reason, it's worth reviewing the page with fresh eyes and asking yourself: Is this page genuinely valuable? Would a user find value in this page if they clicked on it from the search results? If the answer is no to either of those questions, then you need to improve your content.

You can find more potentially low-quality pages that aren't indexed using Ahrefs' Site Audit tool and URL Profiler. To do that, go to Page Explorer in Ahrefs' Site Audit and filter for "thin" pages that are indexable and currently get no organic traffic. In other words, there's a decent chance they aren't indexed.

Export the report, then paste all the URLs into URL Profiler and run a Google Indexation check.

Important. It's recommended to use proxies if you're doing this for lots of pages (i.e., over 100). Otherwise, you run the risk of your IP getting banned by Google. If you can't do that, then another alternative is to search Google for a "free bulk Google indexation checker." There are a few of these tools around, but most of them are limited to fewer than 25 pages at a time.

Check any non-indexed pages for quality issues. Improve where necessary, then request reindexing in Google Search Console.

You should also aim to fix issues with duplicate content. Google is unlikely to index duplicate or near-duplicate pages.
Use the Duplicate content report in Site Audit to check for these issues.

Having too many low-quality pages on your website serves only to waste crawl budget. Here's what Google says on the matter:

"Wasting server resources on [low-value-add pages] will drain crawl activity from pages that do actually have value, which may cause a significant delay in discovering great content on a site."

Think of it like a teacher grading essays, one of which is yours. If they have ten essays to grade, they're going to get to yours quite quickly. If they have a hundred, it'll take them a bit longer. If they have thousands, their workload is too high, and they may never get around to grading your essay.

Google does state that "crawl budget […] is not something most publishers have to worry about," and that "if a site has fewer than a few thousand URLs, most of the time it will be crawled efficiently." Still, removing low-quality pages from your website is never a bad thing. It can only have a positive effect on crawl budget. You can use our content audit template to find potentially low-quality and irrelevant pages that can be deleted.

Backlinks tell Google that a web page is important. After all, if someone is linking to it, then it must hold some value. These are pages that Google wants to index.

For full transparency, Google doesn't only index web pages with backlinks. There are plenty (billions) of indexed pages with no backlinks. However, because Google sees pages with high-quality links as more important, they're likely to crawl (and re-crawl) such pages faster than those without. That leads to faster indexing. We have plenty of resources on building high-quality backlinks on the blog.

Having your website or web page indexed in Google doesn't equate to rankings or traffic. They're two different things.
Indexing means that Google is aware of your website. It doesn't mean they're going to rank it for any relevant and worthwhile queries. That's where SEO comes in: the art of optimizing your web pages to rank for specific queries. Here's a video to get you started with SEO: https://www.youtube.com/watch?v=DvwS7cV9GmQ

There are only two possible reasons why Google isn't indexing your website or web page: technical issues are preventing it, or Google sees your site or page as low-quality and of little value to its users. It's entirely possible that both of those issues exist. However, I would say that technical issues are far more common. Technical issues can also lead to the auto-generation of indexable low-quality content (e.g., problems with faceted navigation). That isn't good.

Still, running through the checklist above should solve the indexation issue nine times out of ten. Just remember that indexing ≠ ranking. SEO is still vital if you want to rank for any worthwhile search queries and attract a constant stream of organic traffic.

site:yourwebsite.com

site:yourwebsite.com/web-page-slug

1) Remove crawl blocks in your robots.txt file

User-agent: Googlebot
Disallow: /

User-agent: *
Disallow: /
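If you'd rather test robots.txt rules before deploying them, Python's standard-library urllib.robotparser can evaluate a file offline. This is an illustrative sketch. The domain and the third rule set are hypothetical. Python's parser is also simpler than Googlebot's: it doesn't handle * or $ wildcards inside paths, for example. Treat Search Console's URL Inspection result as the final word.

```python
from urllib.robotparser import RobotFileParser


def googlebot_allowed(robots_txt: str, url: str) -> bool:
    """Return True if the given robots.txt rules let Googlebot fetch url."""
    parser = RobotFileParser()
    # parse() accepts the file's lines directly, so nothing is fetched over the network.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)


# The two site-wide blocks from the snippets above.
BLOCK_GOOGLEBOT = "User-agent: Googlebot\nDisallow: /"
BLOCK_ALL = "User-agent: *\nDisallow: /"
# A hypothetical rule that blocks only one subsection.
BLOCK_SUBSECTION = "User-agent: *\nDisallow: /private/"

if __name__ == "__main__":
    page = "https://yourdomain.com/blog/post/"
    for label, rules in [("Googlebot block", BLOCK_GOOGLEBOT),
                         ("all-crawler block", BLOCK_ALL),
                         ("/private/ block", BLOCK_SUBSECTION)]:
        print(f"{label}: allowed={googlebot_allowed(rules, page)}")
```

Both site-wide snippets report allowed=False for every page. The subsection rule only blocks URLs under /private/.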

2) Remove rogue noindex tags

Method 1: meta tag

<meta name="robots" content="noindex">

<meta name="googlebot" content="noindex">
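To spot-check a single page's HTML for these tags, a small parser built on Python's html.parser module is enough. This is a minimal sketch, assuming you already have the page's HTML as a string. For simplicity it scans the whole document rather than only the head section. It also treats the "none" directive as noindex, because Google documents "none" as equivalent to "noindex, nofollow".

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from robots and googlebot meta tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # html.parser lowercases tag and attribute names; values may be None.
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").strip().lower() in ("robots", "googlebot"):
            # content can hold several comma-separated directives, e.g. "noindex, nofollow".
            for directive in attr_map.get("content", "").split(","):
                if directive.strip():
                    self.directives.append(directive.strip().lower())


def has_noindex_meta(html: str) -> bool:
    """Return True if a robots/googlebot meta tag blocks indexing."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in parser.directives or "none" in parser.directives


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex"></head></html>'
    print(has_noindex_meta(page))  # True
```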

Method 2: X-Robots-Tag
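The header version can be checked from a script too. In the sketch below, fetch_x_robots_tag sends a HEAD request and noindex_in_headers interprets the values it returns, including crawler-scoped ones such as "googlebot: noindex". Using HEAD is an assumption, since some servers answer HEAD differently from GET. The COLON_DIRECTIVES set is also an assumed list of common directives, not a complete implementation of Google's specification.

```python
import urllib.request

# Directives that use "name: value" syntax themselves, so a colon does not
# always mean the value is scoped to one crawler (assumed list, not exhaustive).
COLON_DIRECTIVES = {"unavailable_after", "max-snippet", "max-image-preview", "max-video-preview"}


def noindex_in_headers(values: list[str], crawler: str = "googlebot") -> bool:
    """Return True if any X-Robots-Tag value tells `crawler` not to index the page."""
    for value in values:
        scope, sep, rest = value.partition(":")
        scope = scope.strip().lower()
        if sep and "," not in scope and scope not in COLON_DIRECTIVES:
            # Crawler-scoped value such as "googlebot: noindex"; skip other crawlers.
            if scope != crawler:
                continue
            directives = rest
        else:
            # Unscoped value such as "noindex, nofollow" applies to every crawler.
            directives = value
        tokens = {d.strip().lower() for d in directives.split(",")}
        if "noindex" in tokens or "none" in tokens:
            return True
    return False


def fetch_x_robots_tag(url: str) -> list[str]:
    """Send a HEAD request and return all X-Robots-Tag header values (needs network)."""
    request = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.headers.get_all("X-Robots-Tag") or []
```

For example, noindex_in_headers(fetch_x_robots_tag("https://yourwebsite.com/some-page/")) returns True if the server sends Googlebot a noindex X-Robots-Tag. The URL is a placeholder. urlopen raises HTTPError for 4xx and 5xx responses, so wrap the call in try/except when scanning many URLs.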

3) Include the page in your sitemap

http://www.google.com/ping?sitemap=http://yourwebsite.com/sitemap_url.xml
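You can also confirm offline whether a URL is actually listed in a sitemap. This sketch parses a standard urlset sitemap with Python's xml.etree.ElementTree. It assumes a single sitemap file rather than a sitemap index. It also compares URLs exactly, so a missing trailing slash or an http/https mismatch counts as "not listed".

```python
import xml.etree.ElementTree as ET

# Namespace used by standard <urlset> sitemaps.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> set[str]:
    """Return every <loc> URL listed in a urlset sitemap."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text}


def in_sitemap(page_url: str, sitemap_xml: str) -> bool:
    """Exact-match check: trailing slashes and http/https must match too."""
    return page_url in sitemap_urls(sitemap_xml)


if __name__ == "__main__":
    sample = (
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        "<url><loc>https://yourwebsite.com/</loc></url>"
        "<url><loc>https://yourwebsite.com/blog/post/</loc></url>"
        "</urlset>"
    )
    print(in_sitemap("https://yourwebsite.com/blog/post/", sample))  # True
    print(in_sitemap("https://yourwebsite.com/blog/post", sample))   # False (no trailing slash)
```

ET.fromstring also accepts raw bytes, so you can pass it the downloaded sitemap body without decoding it first.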

4) Remove rogue canonical tags

<link rel="canonical" href="/page.html">
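To tell a self-referencing canonical from one that points elsewhere, resolve the href against the page's own URL and compare the two. Here is a minimal sketch using Python's html.parser and urllib.parse, with placeholder URLs. The exact-string comparison deliberately doesn't normalize trailing slashes. So a canonical like "/page.html/" on /page.html is reported as pointing elsewhere, which is exactly the kind of rogue canonical worth catching.

```python
from html.parser import HTMLParser
from typing import Optional
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Record the href of every <link rel="canonical"> element."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        # rel can contain several space-separated values.
        if "canonical" in attr_map.get("rel", "").lower().split() and attr_map.get("href"):
            self.hrefs.append(attr_map["href"])


def find_canonical(page_url: str, html: str) -> Optional[str]:
    """Return the first canonical URL, resolved against page_url, or None."""
    parser = CanonicalParser()
    parser.feed(html)
    parser.close()
    return urljoin(page_url, parser.hrefs[0]) if parser.hrefs else None


def points_elsewhere(page_url: str, html: str) -> bool:
    """True if the page declares a canonical that is not itself (no URL normalization)."""
    canonical = find_canonical(page_url, html)
    return canonical is not None and canonical != page_url


if __name__ == "__main__":
    page = "https://yourwebsite.com/page.html"
    print(points_elsewhere(page, '<link rel="canonical" href="/page.html">'))   # False
    print(points_elsewhere(page, '<link rel="canonical" href="/page.html/">'))  # True
```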

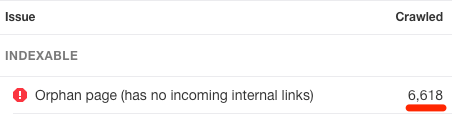

5) Check that the page isn't orphaned

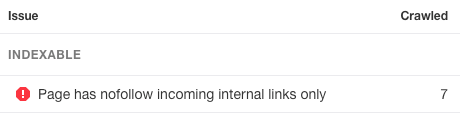
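Finding pages that a crawl never reaches can be sketched as a breadth-first "crawl" over your internal link graph, followed by a set difference against every page you know exists. The link graph and page list below are hypothetical stand-ins for your crawler's output and a CMS export. This version treats any page unreachable from the homepage as orphaned, so it also catches pages linked only from other orphans.

```python
from collections import deque


def reachable_pages(links: dict[str, list[str]], start: str) -> set[str]:
    """Breadth-first walk of internal links, like a crawler starting at `start`."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


def find_orphans(all_pages: list[str], links: dict[str, list[str]], start: str = "/") -> list[str]:
    """Pages you know exist (e.g. from a CMS export) that the crawl never reached."""
    return sorted(set(all_pages) - reachable_pages(links, start))


if __name__ == "__main__":
    # Hypothetical site: page -> internal links found on that page.
    links = {
        "/": ["/blog/", "/about/"],
        "/blog/": ["/blog/post-1/"],
        "/old-landing/": ["/blog/"],  # links out, but nothing links in
    }
    all_pages = ["/", "/about/", "/blog/", "/blog/post-1/", "/blog/post-2/", "/old-landing/"]
    print(find_orphans(all_pages, links))  # ['/blog/post-2/', '/old-landing/']
```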

6) Fix nofollow internal links

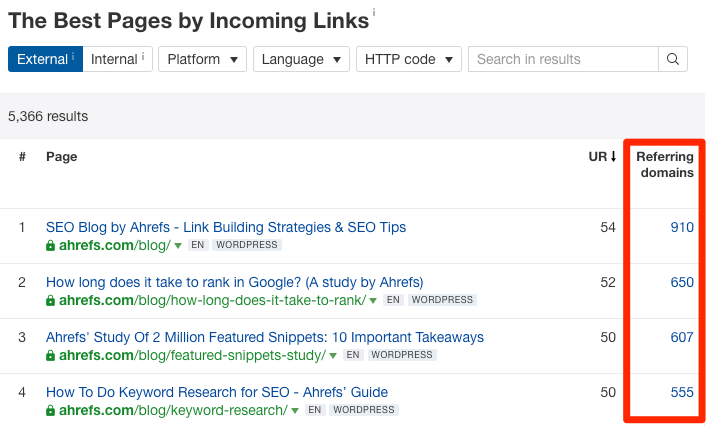
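A crawl-wide version of the "nofollow incoming internal links only" check can be sketched in three steps: collect every internal link, split them into followed and nofollowed, then keep the targets that only ever appear as nofollow. The pages dictionary (URL to HTML) is a hypothetical stand-in for your crawler's output. Only same-host links count as internal here.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class LinkParser(HTMLParser):
    """Collect (absolute URL, is_nofollow) pairs for every <a href> on a page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[tuple[str, bool]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = {name: (value or "") for name, value in attrs}
        href = attr_map.get("href")
        if not href:
            return
        rel_values = attr_map.get("rel", "").lower().split()
        self.links.append((urljoin(self.base_url, href), "nofollow" in rel_values))


def nofollow_only_targets(pages: dict[str, str]) -> list[str]:
    """Internal URLs that are linked to, but only ever with rel="nofollow"."""
    followed, nofollowed = set(), set()
    for page_url, html in pages.items():
        parser = LinkParser(page_url)
        parser.feed(html)
        parser.close()
        host = urlsplit(page_url).netloc.lower()
        for url, is_nofollow in parser.links:
            if urlsplit(url).netloc.lower() != host:
                continue  # external link, not relevant here
            (nofollowed if is_nofollow else followed).add(url)
    return sorted(nofollowed - followed)


if __name__ == "__main__":
    pages = {
        "https://yourwebsite.com/": '<a href="/guide/" rel="nofollow">Guide</a> <a href="/about/">About</a>',
        "https://yourwebsite.com/blog/": '<a href="/about/" rel="nofollow">About</a>',
    }
    print(nofollow_only_targets(pages))  # ['https://yourwebsite.com/guide/']
```

/about/ isn't reported because at least one followed internal link points to it.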

7) Add "powerful" internal links

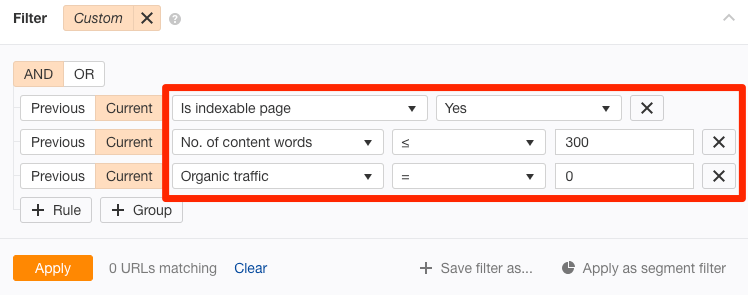

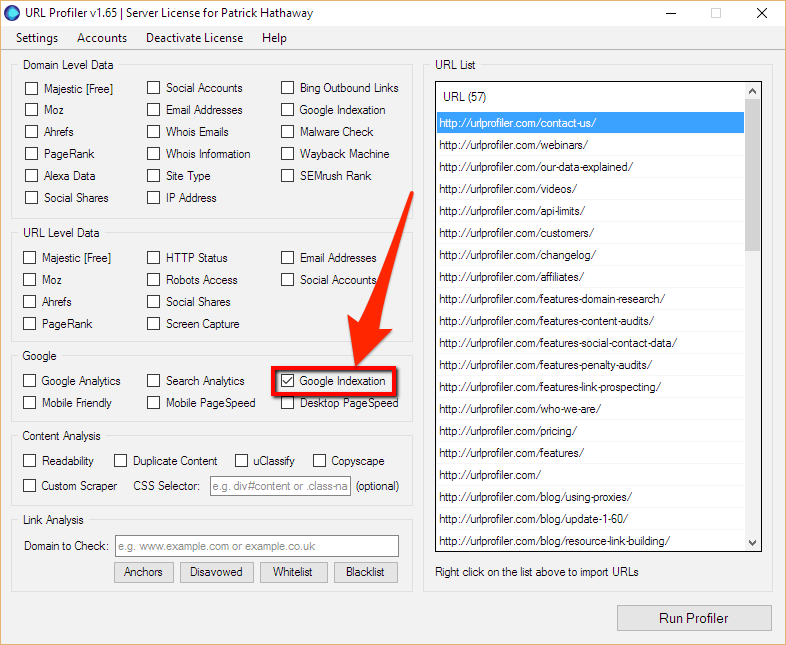

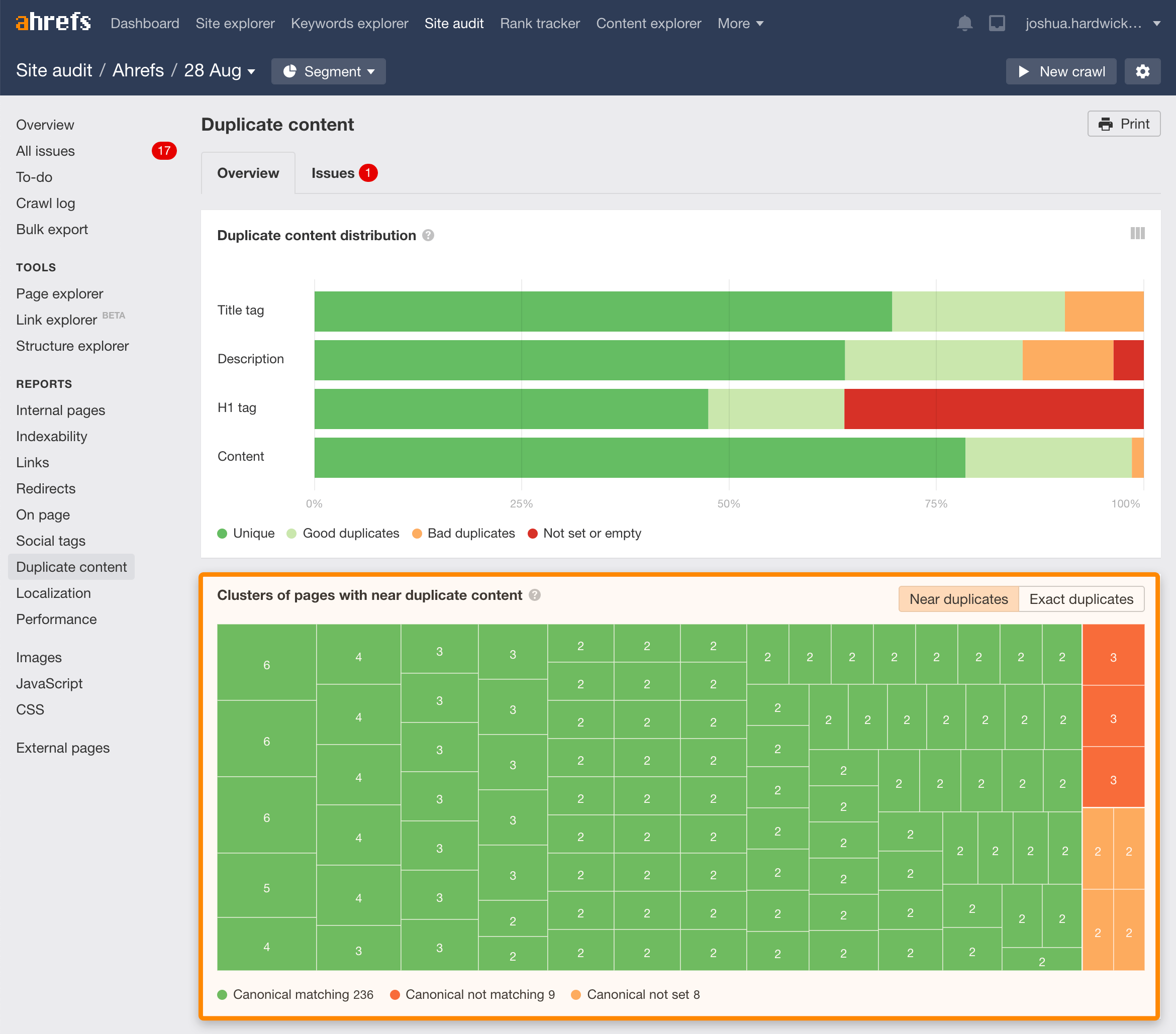

8) Make sure the page is valuable and unique
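Google doesn't publish how it decides that pages are near-duplicates, so the sketch below uses a generic technique instead: word shingles compared with Jaccard similarity. The 0.8 threshold and three-word shingle size are arbitrary assumptions to tune against your own content. Comparing every pair of pages is only practical for a few thousand pages.

```python
import re
from itertools import combinations


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Set of overlapping `size`-word sequences from the text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: set, b: set) -> float:
    """Shared shingles divided by all distinct shingles (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def near_duplicates(pages: dict[str, str], threshold: float = 0.8, size: int = 3):
    """Return (url_a, url_b, score) for every pair at or above the threshold."""
    sets = {url: shingles(text, size) for url, text in pages.items()}
    pairs = []
    for url_a, url_b in combinations(sorted(sets), 2):
        score = jaccard(sets[url_a], sets[url_b])
        if score >= threshold:
            pairs.append((url_a, url_b, round(score, 2)))
    return pairs


if __name__ == "__main__":
    pages = {
        "/shoes-a/": "Buy comfortable red running shoes with free shipping and easy returns",
        "/shoes-b/": "Buy comfortable red running shoes with free shipping and easy returns today",
        "/trail-guide/": "Our guide to choosing trail running shoes for rocky terrain",
    }
    print(near_duplicates(pages))  # [('/shoes-a/', '/shoes-b/', 0.9)]
```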

9) Remove low-quality pages (to optimize "crawl budget")

10) Build high-quality backlinks

Indexing ≠ ranking

Final thoughts

Source: https://ahrefs.com/blog/google-index/
